Why in the News?

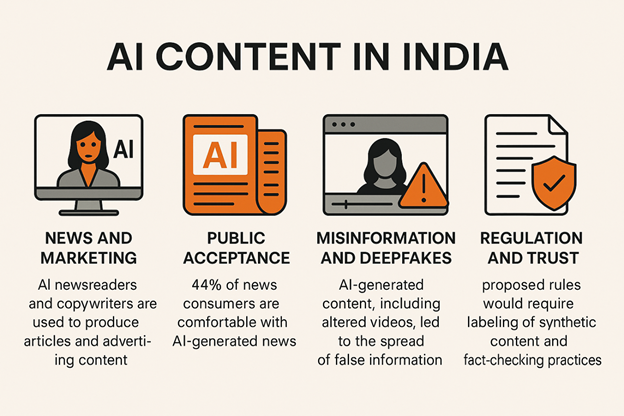

The debate over regulating Artificial Intelligence (AI)-generated content, particularly Synthetic Media or deepfakes, has reached a critical juncture following recent high-profile incidents involving misuse of the technology.

- The Catalyst: Millions of citizens recently viewed a video of a key political figure that had been visually and audibly altered using AI, showing how sophisticated and near-indistinguishable digital alterations have become. The incident, and the speed with which the misleading content spread, led to immediate demands for multi-stakeholder action to safeguard the information environment.

- Government Action: In response, the government has swiftly moved to introduce draft amendments to the Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Rules, 2021. These proposed changes specifically mandate large platforms (Significant Social Media Intermediaries or SSMIs) to clearly label and track such content.

Context and Background: The Regulatory Landscape

The rise of AI has presented a unique challenge to established media and digital governance rules. The regulatory effort is an attempt to bridge the gap between technological advancement and accountability.

- The Existing Framework: The IT Rules, 2021, primarily focus on due diligence for online intermediaries and content takedown mechanisms. However, they lack specific provisions addressing the unique nature of AI-generated content, where the media itself is fabricated to appear authentic.

- The Core Amendment: The draft rules aim to impose a strict obligation on SSMIs to categorize and clearly label any content that is either Synthetic Media or AI-generated. This shifts the burden of identification and transparency onto the platforms.

- Specific Compliance Norms: The proposed labelling mandate is prescriptive. As drafted, a visual label must cover at least 10% of the display area, and an audio disclosure must play during the initial 10% of the clip's duration, so that the disclosure is not easily missed. Furthermore, longer media (like a 30-minute video) would require a substantial 30-second disclaimer.
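
The duration-based threshold can be worked out with simple arithmetic. The sketch below assumes the 10% figure applies to a clip's running time; the function name `min_disclosure_seconds` and its parameters are illustrative and do not come from the draft rules.

```python
def min_disclosure_seconds(duration_s: float, percent: int = 10) -> float:
    """Return the minimum disclosure time for a clip, assuming a percent-of-duration rule."""
    if duration_s < 0:
        raise ValueError("duration must be non-negative")
    # Multiply before dividing so whole-number inputs give exact results.
    return duration_s * percent / 100


# A one-minute audio clip would need a disclosure lasting at least 6 seconds.
print(min_disclosure_seconds(60))  # 6.0
```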

Analyzing the Regulatory Gaps and Challenges

While the intent to curb the misuse of deepfakes is clear, the practical implementation of the current framework faces several crucial challenges related to definition, technology, and enforcement.

1. Defining Synthetic Media: The Problem of Over-Inclusion

The current definition of Synthetic Media as anything “artificially or algorithmically created, modified, or generated to appear authentic” is too broad.

- The Risk: This sweeping definition risks penalizing or creating undue compliance burdens for benign uses. For example, a content creator using a simple AI filter or a studio employing AI tools for routine video editing (like automated colour grading) could technically fall under this mandate, despite having no harmful or misleading intent.

- The Necessary Focus: Regulation should pivot its focus from the method of creation (algorithmic) to the potential for harm. The core target must be content that is malicious, deceptive, or intended to cause public harm.

2. Verification and Technological Credibility

The system relies heavily on platforms to verify content, but they currently lack the necessary technological muscle.

- Lack of Standards: Platforms often default to asking the creator for a declaration, but they lack standard tools or protocols to verify the accuracy of that declaration.

- Tool Limitations: Existing proprietary detection systems are inadequate. The source article notes that the success rate of flagging content that should be labelled is often alarmingly low (ranging from 5% to 51% in some studies), indicating that the technology is still imperfect and easy to bypass.

- Traceability Issue: There is a critical need to enforce Content Provenance standards, such as those promoted by the C2PA (Coalition for Content Provenance and Authenticity). This approach attaches cryptographically signed metadata to media files, and can be paired with digital watermarks, to record their origin and modifications, ensuring traceability and accountability.
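
The provenance idea can be illustrated with a minimal sketch. It is not the C2PA format: real provenance systems use certificate-based signatures and a structured manifest, whereas this example substitutes a shared-secret HMAC from the Python standard library to keep it self-contained. The names `make_manifest`, `verify_manifest`, and the `origin` field are hypothetical.

```python
import hashlib
import hmac
import json


def make_manifest(media: bytes, origin: str, key: bytes) -> dict:
    """Bind a media file's hash and stated origin together under a signature."""
    claim = {"origin": origin, "sha256": hashlib.sha256(media).hexdigest()}
    payload = json.dumps(claim, sort_keys=True).encode()
    # HMAC stands in for the asymmetric signature a real standard would use.
    signature = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return {"claim": claim, "signature": signature}


def verify_manifest(media: bytes, manifest: dict, key: bytes) -> bool:
    """Check that the claim is untampered and still matches the media bytes."""
    payload = json.dumps(manifest["claim"], sort_keys=True).encode()
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    signature_ok = hmac.compare_digest(expected, manifest["signature"])
    hash_ok = manifest["claim"]["sha256"] == hashlib.sha256(media).hexdigest()
    return signature_ok and hash_ok


key = b"demo-signing-key"  # assumption: a secret held by the signing tool
original = b"raw video bytes"
manifest = make_manifest(original, "camera-app", key)

print(verify_manifest(original, manifest, key))               # True
print(verify_manifest(original + b" edited", manifest, key))  # False
```

Any change to the media after signing breaks the hash check, which is what lets a platform detect that a file no longer matches its declared origin.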

3. Complexity of Enforcement and Control vs. Creativity

The proposed rules face the challenge of being implemented in a real-world, dynamic digital ecosystem.

- Enforcement Burden: Small creators and large platforms alike could find the stringent rules, such as measuring the exact 10% threshold, administratively complex and potentially costly.

- The Need for Grading: Treating a clip with a simple voice alteration the same as a complex, fully fabricated political video is counterproductive. A one-size-fits-all label stifles creativity and does not help users understand what they are seeing.

Way Forward: Towards a Graded and Targeted Framework

To create a robust and future-proof regulatory framework, the government must incorporate principles of precision, transparency, and graded compliance.

1. Implementing a Graded Labelling System

Instead of a simple “synthetic” tag, a tiered disclosure system provides greater clarity for consumers and allows for targeted intervention.

| Labelling Category | Description and Example | Purpose |
| --- | --- | --- |
| Fully AI-Generated | Content completely created by AI (e.g., an entirely fabricated news anchor reading a script). | Highest warning; high potential for deception. |
| AI-Altered | Real media fundamentally changed using AI (e.g., a real video with a cloned voice or face-swap). | Focus on material alteration that changes context or identity. |
| AI-Assisted | Content where AI is used for minor enhancements (e.g., background noise reduction, minor aesthetic changes). | Acknowledges AI use without implying malicious intent. |
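
The three tiers can be expressed as a small decision rule. This is a sketch only: the `AILabel` enum, the `choose_label` function, and its three boolean inputs are assumptions made for illustration, and deciding whether an alteration is "material" would in practice need human or tool-assisted judgment.

```python
from enum import Enum
from typing import Optional


class AILabel(Enum):
    FULLY_AI_GENERATED = "Fully AI-Generated"
    AI_ALTERED = "AI-Altered"
    AI_ASSISTED = "AI-Assisted"


def choose_label(
    fully_generated: bool,
    materially_altered: bool,
    ai_enhanced: bool,
) -> Optional[AILabel]:
    """Pick the strictest applicable tier; return None when no AI was involved."""
    if fully_generated:
        return AILabel.FULLY_AI_GENERATED
    if materially_altered:
        return AILabel.AI_ALTERED
    if ai_enhanced:
        return AILabel.AI_ASSISTED
    return None


# A real video with a cloned voice and some noise reduction gets the stricter tier.
print(choose_label(False, True, True).value)  # AI-Altered
```

Checking the tiers from strictest to mildest means content that qualifies for several categories always carries the strongest warning.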

2. Prioritizing Intent and Harmful Outcomes

Regulatory focus should be narrowed to content that demonstrates a clear malicious intent or carries a significant risk of public harm.

- Example: While an artist using AI to create speculative sci-fi landscapes is benign, a deepfake used to influence an election or commit financial fraud (e.g., voice-cloning a CEO to authorize a transfer) must be the primary focus of penal action. Precision is the key to a balance between control and creativity.

3. Strengthening Platform Accountability

Platforms must be held to a higher standard of due diligence, moving beyond mere user-declaration.

- Technological Mandate: Platforms should be mandated to integrate tools that can detect cryptographic watermarks and use AI models trained to spot deepfake signatures.

- Human-in-the-Loop: Closing the “gaps in automated detection systems” requires dedicated human judgment and content moderation teams, especially for high-risk categories like election-related media, to ensure content is accurately assessed, flagged, and, if necessary, removed.

Conclusion: Ensuring Clarity and Trust

The proliferation of Synthetic Media presents an existential challenge to the information ecosystem, demanding clear and firm regulation. The draft amendments to the IT Rules are a necessary foundation, but their effectiveness hinges on moving towards a graded, precise, and technologically informed framework.

By ensuring the final rules provide unambiguous clarity for creators and platforms, while simultaneously empowering users with the necessary transparency (through detailed, prominent labels), the government can uphold the essential goal: maintaining public trust and ensuring that technological advancements do not erode the shared factual reality upon which society operates.

Source: Fine-tune the AI labelling regulations framework – The Hindu

UPSC CSE PYQ

| Year | Question |
| --- | --- |
| 2023 | What are the main challenges in the governance of Artificial Intelligence (AI) in India? How can they be addressed? |
| 2021 | Discuss the ethical issues involved in the use of Artificial Intelligence in governance. |
| 2020 | How is Artificial Intelligence helping in e-governance? Discuss the challenges of its adoption in public administration. |
| 2019 | What is Artificial Intelligence (AI)? What are India’s initiatives in developing AI? |
| 2018 | Artificial Intelligence is a double-edged sword. Discuss the ethical issues involved. |