Context:

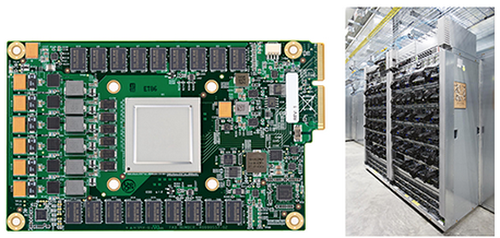

The global AI landscape is shifting toward domain-specific computing. Google’s Ironwood, its seventh-generation Tensor Processing Unit, exemplifies this shift. It is a chip specialized for AI workloads rather than general-purpose computing, built to meet the heavy computational demands of generative AI.

About Tensor Processing Units (TPUs):

- Specialization: A TPU is a custom Application-Specific Integrated Circuit (ASIC) designed specifically for machine learning (ML) workloads, especially deep learning.

- Origin: Announced by Google in 2016, TPUs were first built to accelerate internal services such as Search, Translate, and Photos.

- Current Role: A key component of large-scale AI infrastructure, deployed in Google's data centers and available to external users through Google Cloud.

Why Do Tensors Matter?

- Mathematical Core: AI models represent their data and parameters as tensors (multidimensional arrays of numbers) and compute on them to generate predictions.

- Computational Optimization: TPUs are architecturally tuned for high-speed tensor computations through:

  - Massive Parallelism: Executing thousands of multiply-accumulate operations simultaneously in every clock cycle.

  - Energy Efficiency: Delivering higher performance per watt than GPUs on many ML workloads.

  - Task-Specific Circuitry: Dedicated matrix-math hardware avoids much of the control logic that general-purpose chips need, so more of the chip's area and power goes to arithmetic.
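To make "tensor" and "massive parallelism" concrete, here is a minimal Python sketch (standard library only, illustrative). It treats tensors as nested lists, counts the multiply-accumulate (MAC) operations in a matrix multiply, and compares an idealized cycle count for one MAC per cycle against a hypothetical 128 x 128 grid of MAC units. The layer sizes, the array size, and the helper names (`shape`, `matmul_macs`, `ideal_cycles`) are assumptions for illustration, not figures for any real TPU, and the cycle model ignores memory bandwidth and other overheads.

```python
"""Illustrative sketch: tensors as nested lists and the cost of a matrix multiply."""

from math import ceil


def shape(tensor):
    """Return the shape of a rectangular nested list, e.g. [[1, 2], [3, 4]] -> (2, 2)."""
    dims = []
    while isinstance(tensor, list):
        dims.append(len(tensor))
        tensor = tensor[0] if tensor else None
    return tuple(dims)


def matmul_macs(m, k, n):
    """Multiply-accumulate (MAC) operations needed for an (m x k) @ (k x n) product."""
    return m * k * n


def ideal_cycles(total_macs, mac_units):
    """Idealized cycle count if `mac_units` MACs run in parallel every cycle.

    Ignores memory bandwidth, pipeline fill and other real-world overheads.
    """
    return ceil(total_macs / mac_units)


scalar = 7                                                # rank-0 tensor, shape ()
vector = [1.0, 2.0, 3.0]                                  # rank-1 tensor, shape (3,)
matrix = [[1, 2, 3], [4, 5, 6]]                           # rank-2 tensor, shape (2, 3)
batch = [[[0] * 4 for _ in range(3)] for _ in range(2)]   # rank-3 tensor, shape (2, 3, 4)

print(shape(scalar), shape(vector), shape(matrix), shape(batch))
# -> () (3,) (2, 3) (2, 3, 4)

# Hypothetical dense layer: a batch of 512 inputs, 1024 features in, 1024 features out.
macs = matmul_macs(512, 1024, 1024)
print(macs)                              # -> 536870912
print(ideal_cycles(macs, 1))             # one MAC per cycle -> 536870912
print(ideal_cycles(macs, 128 * 128))     # hypothetical 128 x 128 MAC grid -> 32768
```

The same 536,870,912 MACs that take over half a billion cycles one at a time fit into 32,768 idealized cycles on the hypothetical grid, which is the intuition behind dedicating silicon to matrix units.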

Comparative Framework: CPU vs. GPU vs. TPU

| Feature | CPU | GPU | TPU |
| --- | --- | --- | --- |
| Primary Design | General-purpose logic | Graphics & parallel tasks | AI/ML-specific (ASIC) |
| Execution | Largely serial (a few powerful cores) | Massively parallel (thousands of simpler cores) | Matrix-based parallelism (systolic arrays) |
| Best Use Case | OS tasks, everyday apps | Gaming, 3D graphics, diverse ML | LLMs, deep learning |
| Power Profile | Flexible, but less efficient for ML | High power consumption | Optimized for performance per watt |

Q. With respect to Tensor Processing Units (TPUs), consider the following statements:

1. They are classified as Application-Specific Integrated Circuits (ASICs) designed to optimize deep learning workloads.

2. TPUs are less energy-efficient than Graphics Processing Units (GPUs) when processing massive datasets.

3. The architecture of a TPU is specifically optimized for tensor computations and matrix-heavy operations.

Which of the statements given above are correct?

(a) 1 and 2 only

(b) 2 and 3 only

(c) 1 and 3 only

(d) 1, 2, and 3

Solution: (c) 1 and 3 only

Statement 1 is correct: A TPU is a custom Application-Specific Integrated Circuit (ASIC). Unlike general-purpose chips, ASICs are hardwired for a specific task, in this case accelerating machine learning workloads. The first-generation TPU handled only inference; later generations accelerate both training and inference.

Statement 2 is incorrect: TPUs are generally more energy-efficient than GPUs for large-scale AI workloads. Because they omit hardware needed for non-AI tasks (such as graphics rendering or general-purpose control logic), they achieve a higher performance-per-watt ratio.

Statement 3 is correct: The TPU architecture features specialized Matrix Multiplication Units (MXUs) built as "systolic arrays": grids of multiply-accumulate cells that pass data directly to their neighbors. This lets the chip process tensors (multidimensional arrays) with high throughput while minimizing memory accesses.
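The systolic-array idea in Statement 3 can be simulated in a few lines. The sketch below is a hypothetical, cycle-by-cycle model of an output-stationary systolic array (each cell keeps one output value while inputs move past it). It illustrates the general technique and is not claimed to match the exact dataflow of Google's MXU. Rows of `a` enter from the left and columns of `b` enter from the top, each skewed by one cycle per row or column, so that matching operands meet in the right cell. The function name `systolic_matmul` is illustrative.

```python
"""Hypothetical cycle-level sketch of an output-stationary systolic array."""


def systolic_matmul(a, b):
    """Multiply a (n x k) by b (k x m) on a simulated n x m grid of cells.

    Returns (result, cycles). Cell (i, j) accumulates result[i][j]; values of
    `a` move one cell right per cycle and values of `b` move one cell down.
    """
    n, k_dim = len(a), len(a[0])
    if len(b) != k_dim:
        raise ValueError("inner dimensions must match")
    m = len(b[0])

    acc = [[0] * m for _ in range(n)]      # per-cell accumulators (the outputs)
    a_reg = [[0] * m for _ in range(n)]    # value each cell passes to its right
    b_reg = [[0] * m for _ in range(n)]    # value each cell passes downward
    cycles = n + m + k_dim - 2             # last operands meet at cell (n-1, m-1)

    for t in range(cycles):
        new_a = [[0] * m for _ in range(n)]
        new_b = [[0] * m for _ in range(n)]
        for i in range(n):
            for j in range(m):
                if j == 0:                 # left edge: a[i][k] enters at cycle i + k
                    k = t - i
                    a_in = a[i][k] if 0 <= k < k_dim else 0
                else:                      # otherwise take the left neighbor's value
                    a_in = a_reg[i][j - 1]
                if i == 0:                 # top edge: b[k][j] enters at cycle k + j
                    k = t - j
                    b_in = b[k][j] if 0 <= k < k_dim else 0
                else:                      # otherwise take the upper neighbor's value
                    b_in = b_reg[i - 1][j]
                acc[i][j] += a_in * b_in   # one multiply-accumulate per cell per cycle
                new_a[i][j], new_b[i][j] = a_in, b_in
        a_reg, b_reg = new_a, new_b        # all cells update simultaneously

    return acc, cycles


print(systolic_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]]))
# -> ([[19, 22], [43, 50]], 4)
```

Each value of `a` and `b` is read from memory once at the edge of the grid and then reused as it moves from cell to cell, which is why a systolic design needs far fewer memory accesses than one that reloads operands for every multiplication.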